Search Disrupted Newsletter (Issue 24)

Anthropic pays record $1.5B copyright settlement, GPT-5's 'Research Goblin' changes mobile research, Google expands AI globally, and publishers unite against AI scraping.

Anthropic pays record $1.5 billion copyright settlement

It feels like a real milestone that Anthropic (Claude) just agreed to pay a massive $1.5 billion settlement to 500,000 authors for copyright violations.

It’s not quite a referendum on what constitutes “fair use” in the context of AI model training, as the underlying issue is that the authors’ works were obtained illegally via a pirate book download site.

The settlement (which is still somewhat in dispute) has each author getting $3,000 per work, and Anthropic must delete illegally downloaded works and affirm they didn’t use pirated content in their public AI technologies.

The authors’ lawyer called this a “powerful message to AI companies and creators alike that taking copyrighted works from these pirate websites is wrong.”

Many of the CMOs, content and search marketers I talk with weekly still steeple their fingers and ask gravely, “what are these AI companies doing with our data?”, and there are two answers to that:

First, from an abstract computer science perspective, they’re just looking for patterns of speech and raw data (developing towards artificial general intelligence or building a model of the world).

Second, they’re building something more like a search engine that answers questions for people, refers them to your site, recommends you or your competitors, etc.

I’m still really mixed on this. I personally know several authors who are quite legitimately upset that their hard work was, in their minds, “sucked up for who knows what by a bunch of greedy tech bros”.

But I’m also aware of how thin the line is between the two uses outlined above. Anthropic has quite publicly and indisputably taken great pains to make sure that it’s not generally possible to just get a book regurgitated to you out of Claude, and most authors seem quite ok with having their book recommended to new readers by the AI services.

The real question: Will this make AI companies more hesitant about how they collect their training data, making it harder for search marketers?

Link: Anthropic Will Pay Record-Breaking $1.5 Billion settlement

GPT-5’s “Research Goblin” is changing mobile research

Simon Willison just coined the perfect term for GPT-5’s new search capabilities: the “Research Goblin”.

I appreciate this as it captures the industrious but not entirely trustworthy nature of the AI search agents.

He highlights what I see as the true growing search experience: hastily constructed one-off queries spoken into a phone that kick off a massive effort (in this case by GPT-5), resulting in a comprehensive and customized “report”.

Willison’s recommendation: Use hints like “go deep” to trigger more thorough research, and be experimental with your queries.

As a search marketer, you may be unfamiliar with Simon’s writings (and potentially even put off by the very 90s aesthetic of his site), but over the last couple of years, he’s proven to be very good at predicting where and how AI will be used in everyday life.

There’s a writing technique of focusing as if you were writing to a specific individual. Over time this morphed into crafting content to be read by Google, and I genuinely think that the new strategy is going to be writing for the “Research Goblin”, an AI intermediary between user searches and your site.

Link: Research Goblin Analysis

Google admits “the open web is in rapid decline”

Google admitted that “the open web is already in rapid decline,” despite months of publicly maintaining that the web is “thriving.”

This admission came in a filing related to Google’s antitrust case, where the company argued that breaking up its advertising business would “only accelerate” this decline.

Google’s contradiction is remarkable. CEO Sundar Pichai recently claimed they’re “definitely sending traffic to a wider range of sources” after AI search rollouts. VP Nick Fox said “the web is thriving.”

But the reality is that many (most?) of Google’s informational searches are being answered in AI overviews and this is 100% cutting into the overall amount of traffic that lands on sites.

Sidenote: I often get really frustrated with Google’s imprecise way of describing clicks/traffic as they often hide behind “quality” indicators to couch their statements.

But in court, facing potential antitrust action, where words actually matter, Google’s tune changed completely.

Google spokesperson Jackie Berté tried to walk this back, claiming they were only referring to “open-web display advertising,” not the open web as a whole. But the filing’s language is clear.

The implications are massive. If Google itself admits the open web is in decline, we’re looking at a fundamental restructuring of how online content and commerce work.

Link: Google Admits Open Web Decline

Google expands AI Mode beyond English for the first time

Google just rolled out AI Mode (which may soon become the default search experience) in five new languages: Hindi, Indonesian, Japanese, Korean, and Brazilian Portuguese. This is the first expansion beyond English since AI Mode launched and will definitely not be the last.

Google emphasized that “building a truly global Search goes far beyond translation,” using Gemini 2.5 Pro’s “advanced multimodal and reasoning capabilities” to make results “locally relevant and useful.”

Simultaneously, the Gemini app now accepts audio files (the #1 user request), with free users getting 10 minutes of audio and five daily prompts, while Pro and Ultra users can upload up to three hours.

NotebookLM also got a major update, creating reports in over 80 languages in various formats: study guides, briefing docs, blog posts, flashcards, and quizzes with customizable structure, tone, and style.

For global search marketers, this is the starting gun for true multilingual AI search competition. These aren’t just translated results - they’re culturally adapted AI responses that understand local context.

If you’re doing international SEO, you need to start thinking about how your content performs in AI search across these languages and regions. The old model of “translate and optimize” won’t work when AI is providing synthesized, culturally relevant answers.

Link: Google Expands AI Mode Languages

Really Simple Licensing - new AI indexing guidelines

Reddit, Yahoo, Medium, and other major platforms are adopting a new “Really Simple Licensing” (RSL) standard to get compensated for AI content indexing.

I’m very deliberately choosing to use the word indexing here instead of scraping, as I feel like “scraping” is one of those linguistic PR tactics that tries to paint AI search as something distinct from what Google and Bing bots have been doing for decades.

The RSL standard adds licensing terms to robots.txt, offering options like free usage, attribution, subscription, pay-per-crawl, and pay-per-inference (paying only when content is used to generate responses).
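To make the difference between those payment options concrete, here is a minimal Python sketch. The function name, the prices, and the usage numbers are all hypothetical assumptions for illustration; this is not the standard’s actual syntax, and RSL itself doesn’t set these prices.

```python
# Hypothetical comparison of the RSL-style payment options listed above.
# All rates and usage figures are made up for illustration.

def fee_cents(option: str, crawls: int, inferences: int,
              per_crawl_cents: int = 5, per_inference_cents: int = 1,
              subscription_cents: int = 2000) -> int:
    """Return what an AI company would owe, in cents, under one option."""
    if option in ("free", "attribution"):
        return 0  # no money changes hands (attribution asks for credit instead)
    if option == "subscription":
        return subscription_cents  # flat fee regardless of usage
    if option == "pay-per-crawl":
        return crawls * per_crawl_cents  # charged every time the bot fetches a page
    if option == "pay-per-inference":
        # charged only when the content is used to generate a response
        return inferences * per_inference_cents
    raise ValueError(f"unknown option: {option}")


for option in ("free", "attribution", "subscription",
               "pay-per-crawl", "pay-per-inference"):
    print(option, fee_cents(option, crawls=200, inferences=3000))
```

With these made-up numbers, a page fetched 200 times but used in 3,000 answers earns more under pay-per-inference than under pay-per-crawl. A page that is crawled often but rarely used in answers would see the opposite.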

The RSL Collective, a new nonprofit managing the standard, aims to “establish fair market prices and strengthen negotiation leverage for publishers,” drawing inspiration from music royalty nonprofits like ASCAP and BMI.

Reddit CEO Steve Huffman called it “an important step toward protecting the open web.”

This is potentially huge for content creators. Instead of hoping AI companies will voluntarily pay for training data, publishers are creating a standardized system for licensing and compensation.

The question is enforcement. Will AI companies respect these licensing terms, or will this become another unenforceable standard?

My intuition is that this is going to create a two-tier system:

Expensive licensed content gets skipped in AI indexing because it signals a potentially litigious data source (see the Anthropic settlement), and the source becomes increasingly irrelevant.

Unlicensed content gets included and ends up becoming much more influential as a result.

Link: RSL Collective

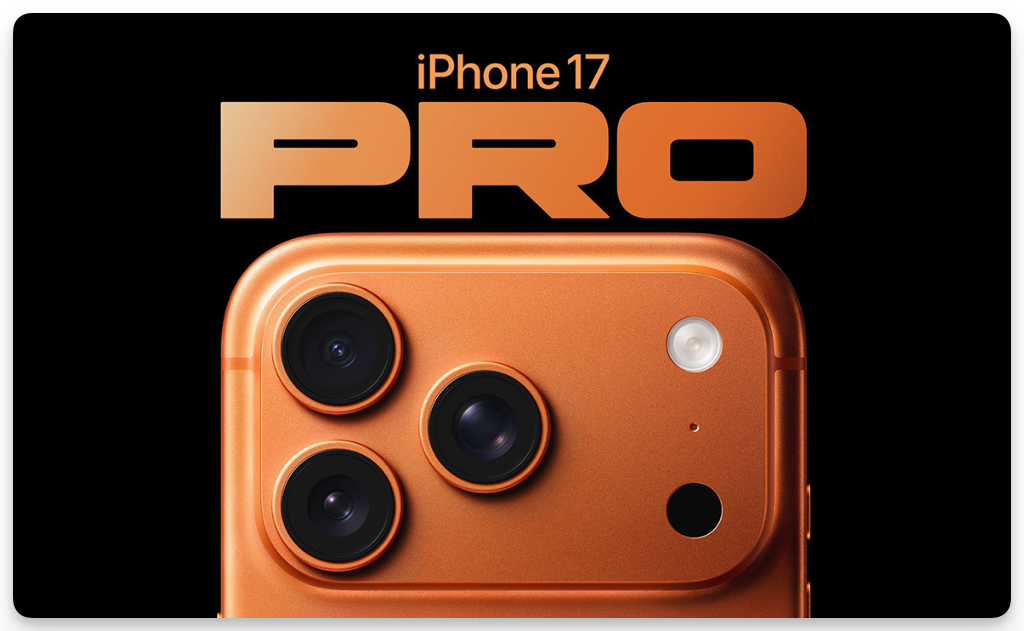

Apple’s A19 Pro brings GPU acceleration that could revolutionize local LLMs

Apple’s new A19 Pro chip includes a game-changing feature that has mostly flown under the radar: matmul acceleration in its GPU, equivalent to Nvidia’s Tensor cores.

I basically won’t shut up about how I think local inference on AI models is the future, and it’s unsung underlying technical changes like this that really pave the way.

We’re still in the “strung together and barely works” stage of AI, where most of the actual computing hardware that exists in the world can’t really take advantage of it.

But this is changing; currently, Macs excel at running large language models thanks to their high memory bandwidth and VRAM capacity, but they struggle with prompt processing speeds. While a Mac might technically be able to run a large language model, it’s typically painfully slow.

The A19 Pro’s GPU acceleration directly addresses this bottleneck. Matrix multiplication is the core computational operation in transformer models, and hardware acceleration makes these calculations dramatically faster.
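A rough back-of-the-envelope sketch in Python shows why this matters. It relies on two common rules of thumb: processing a prompt costs about 2 floating-point operations per model parameter per token (so it is limited by matmul throughput), while generating each new token mostly requires reading all the weights once (so it is limited by memory bandwidth). The hardware numbers below are hypothetical placeholders, not Apple specifications.

```python
# Rough estimates only; the hardware figures used below are hypothetical.

def prefill_seconds(params: float, prompt_tokens: int, flops_per_sec: float) -> float:
    """Compute-bound estimate: ~2 FLOPs per parameter per prompt token."""
    return 2 * params * prompt_tokens / flops_per_sec


def decode_seconds_per_token(model_bytes: float, bytes_per_sec: float) -> float:
    """Bandwidth-bound estimate: each generated token reads all weights once."""
    return model_bytes / bytes_per_sec


PARAMS = 8e9           # an 8-billion-parameter model
PROMPT_TOKENS = 4000   # a long prompt, e.g. a pasted document
MODEL_BYTES = 8e9      # the same model stored at 1 byte per weight

baseline = prefill_seconds(PARAMS, PROMPT_TOKENS, flops_per_sec=1e13)
accelerated = prefill_seconds(PARAMS, PROMPT_TOKENS, flops_per_sec=4e13)
per_token = decode_seconds_per_token(MODEL_BYTES, bytes_per_sec=4e11)

print(f"prompt processing, baseline GPU:  {baseline:.1f} s")
print(f"prompt processing, 4x matmul GPU: {accelerated:.1f} s")
print(f"generation, per token:            {per_token * 1000:.0f} ms")
```

In this sketch, faster matmul hardware shortens the wait before the first word appears, while generation speed after that still depends on memory bandwidth, which is where Macs already do well.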

If (more likely when) Apple brings this GPU upgrade to the M5 generation, we’re looking at the dawn of truly viable local LLM inference on consumer hardware. This could shift the entire AI landscape away from cloud-dependent models toward local processing.

For search marketers, this has massive implications. Local LLMs mean users could run sophisticated AI analysis without internet connections, potentially bypassing traditional search engines entirely. Content strategies may need to account for AI models that never “phone home” to report what users are researching.

The era of powerful, private, local AI inference might be closer than we think.

Link: Apple A19 Pro Specifications

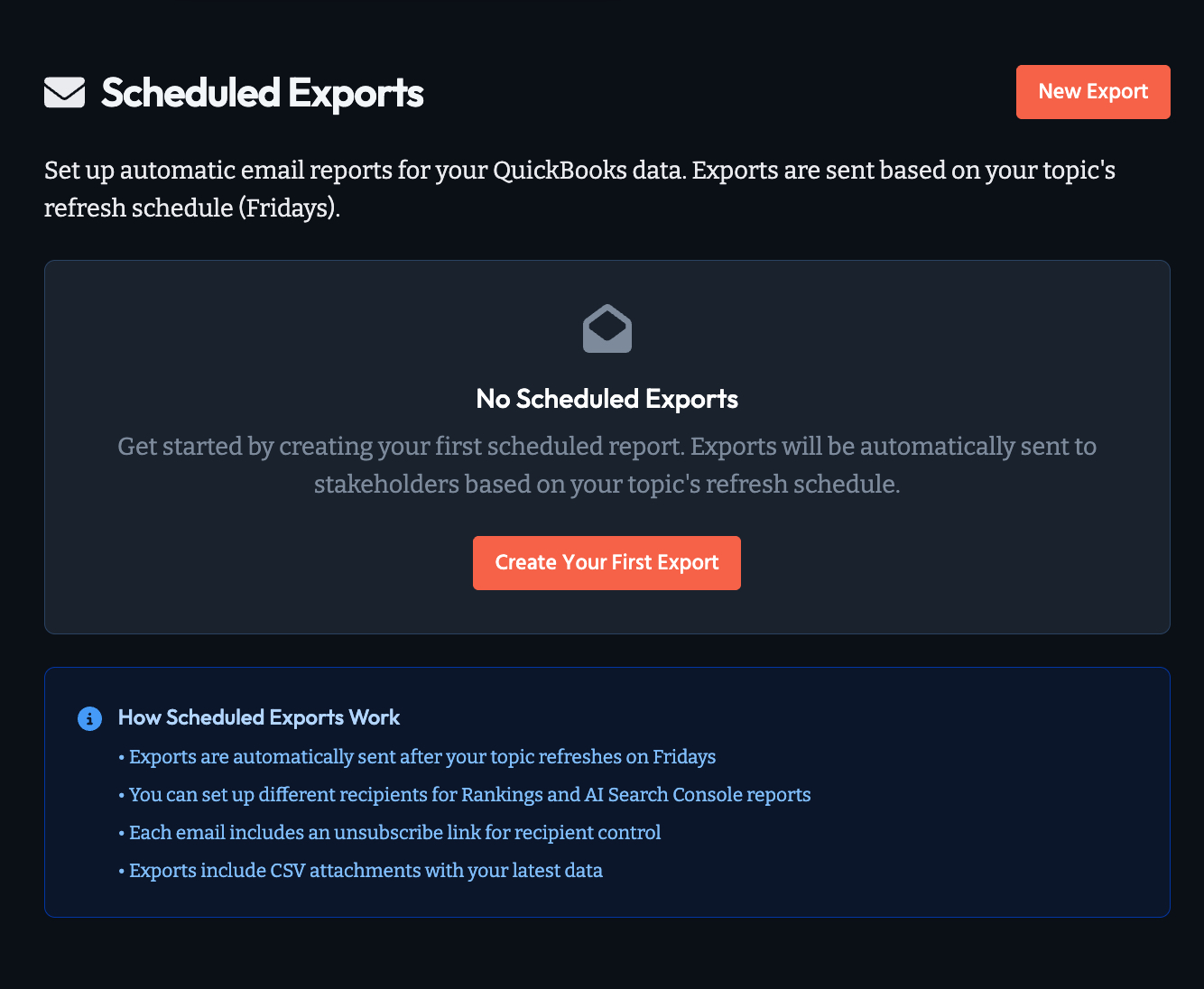

New: Knowatoa Scheduled CSV Exports

We’ve just launched a feature that makes staying on top of your AI search performance effortless: Scheduled CSV Exports.

Instead of manually generating weekly reports, you can now set up automatic email delivery of your complete AI search rankings data. The system sends comprehensive CSV files with all your performance metrics directly to your inbox, your team’s, or an external reporting tool like NinjaCat on whatever schedule works best for your reporting needs.

This is particularly valuable for agencies managing multiple clients - you can set up automatic monthly reports for stakeholders, or configure weekly internal updates to monitor performance trends across your entire portfolio.

The CSV format makes it easy to import into Excel, Google Sheets, or business intelligence tools for deeper analysis. Many reporting platforms like NinjaCat even have native connectors that can automatically ingest CSV email attachments.

Setting it up takes less than a minute: navigate to any of your topics, click “Scheduled Exports” in the sidebar, enter an email address, and choose your data type. The first export gets sent immediately so you can see exactly what you’ll receive.

Link: Learn More About Scheduled Exports

Thanks

Genuine thanks to all the authors out there.

It’s hard to create new things in general, and I think it’s particularly hard right now, and I’m very grateful for people putting new things into the world.

p.s. It would really help me out if you could Follow me on LinkedIn